In a typical web application, it is common to need several HTTP requests in parallel. This looks straightforward in .NET since the introduction of async/await, but it is easy to run into problems once the number of parallel HTTP requests grows beyond some threshold. I will first illustrate the problem with example code, and then suggest my solution.

Making Parallel HTTP Requests – The Straightforward Way

It does not take much effort to make parallel HTTP requests in .NET Core. All we have to do is use some async/await magic.

    using System;
    using System.Collections.Generic;
    using System.Net.Http;
    using System.Threading.Tasks;

    public async Task<HttpResponseMessage[]> ParallelHttpRequests()
    {
        var tasks = new List<Task<HttpResponseMessage>>();
        int numberOfRequests = 1000;

        // Start every request immediately; nothing limits how many run at once.
        for (int i = 0; i < numberOfRequests; ++i)
        {
            tasks.Add(MakeRequestAsync(RandomWebsites.Uris[i]));
        }

        return await Task.WhenAll(tasks.ToArray());
    }

    private async Task<HttpResponseMessage> MakeRequestAsync(Uri uri)
    {
        try
        {
            using var httpClient = HttpClientFactory.Create();
            return await httpClient.GetAsync(uri);
        }
        catch
        {
            // Ignore any exception to continue loading other URLs.
            // You should definitely log the exception in a real-life application.
            return new HttpResponseMessage();
        }
    }

In this code example, we have a for-loop in which we call our MakeRequestAsync method and store the returned tasks in a list. After that, we wait for all tasks to finish. The MakeRequestAsync method is straightforward. We create an HttpClient object, call GetAsync on it to send the request, and return the response once it arrives. In addition, a try/catch block keeps a single failed request from failing the whole operation.

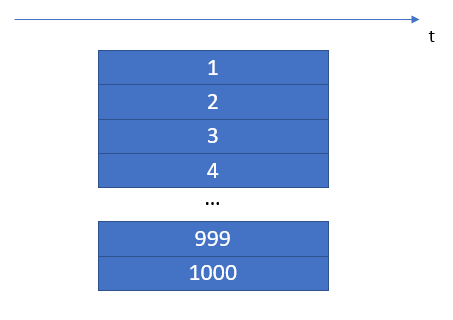

This code will easily make 1000 parallel requests in a console application running on your computer, since your computer is not a server currently serving hundreds of requests per second and has a horde of available ports and resources.

What if your code should run on a server with limited resources? Opening 1000 TCP sockets to make 1000 requests is actually not a good idea when the server has some other busy applications to run. It is easy to fill all of the available ports on the server and make all applications on that server suffer from the lack of necessary ports.

Making Parallel HTTP Requests in Batches

It is a good idea to limit the maximum number of parallel HTTP requests somehow. Let’s try to make a total of 1000 requests in batches.

    public async Task<HttpResponseMessage[]> ParallelHttpRequestsInBatches()
    {
        var tasks = new List<Task<HttpResponseMessage>>();
        int numberOfRequests = 1000;
        int batchSize = 100;
        int batchCount = (int)Math.Ceiling((decimal)numberOfRequests / batchSize);

        for (int i = 0; i < batchCount; ++i)
        {
            // Start one batch of requests.
            for (int j = 0; j < batchSize; ++j)
            {
                tasks.Add(MakeRequestAsync(RandomWebsites.Uris[i * batchSize + j]));
            }

            // Earlier batches have already completed, so this effectively
            // waits for the current batch only.
            await Task.WhenAll(tasks.ToArray());
        }

        return await Task.WhenAll(tasks.ToArray());
    }

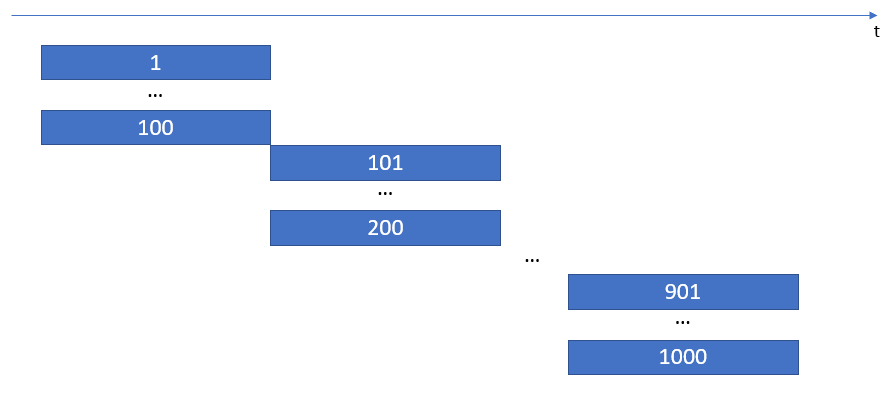

In this code example, we first define a batch size and calculate the total batch count from the total number of requests and the batch size. Then we nest two for-loops. The outer loop iterates over the batches, and the inner loop iterates over the requests within a batch. This means that we start HTTP requests until the first batch is full, wait for them to finish, then fill the second batch, wait for them to finish, then fill the third batch, and so on.

For this code, the MakeRequestAsync method is no different from the first example, so I omitted it here for brevity. Moreover, we need to make sure that the last batch is filled only while there are still URLs left to request (e.g. for 999 requests, the inner for-loop must stop after the 99th request of the last batch and not go for a 100th). I also omitted that check here to keep the demonstration clear.
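
The last-batch check mentioned above could look roughly like the sketch below. It is not the code from this article: BatchRunner, RunInBatchesAsync and the work delegate are hypothetical names, and work stands in for MakeRequestAsync so that the example runs without network access.

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public static class BatchRunner
{
    // Hypothetical helper: runs `work` for every item in batches of at most
    // `batchSize`, and never indexes past the end of `items`.
    public static async Task<TResult[]> RunInBatchesAsync<TItem, TResult>(
        IReadOnlyList<TItem> items, int batchSize, Func<TItem, Task<TResult>> work)
    {
        var results = new List<TResult>(items.Count);

        for (int start = 0; start < items.Count; start += batchSize)
        {
            // The last batch may be smaller than batchSize
            // (e.g. 99 items when there are 999 requests and batchSize is 100).
            int end = Math.Min(start + batchSize, items.Count);

            var batch = new List<Task<TResult>>(end - start);
            for (int i = start; i < end; ++i)
            {
                batch.Add(work(items[i]));
            }

            // Wait for the whole batch before starting the next one.
            results.AddRange(await Task.WhenAll(batch));
        }

        return results.ToArray();
    }
}
```

With this shape, a call such as `await BatchRunner.RunInBatchesAsync(RandomWebsites.Uris, 100, MakeRequestAsync)` would be the assumed equivalent of the method above, provided RandomWebsites.Uris is a list or an array.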

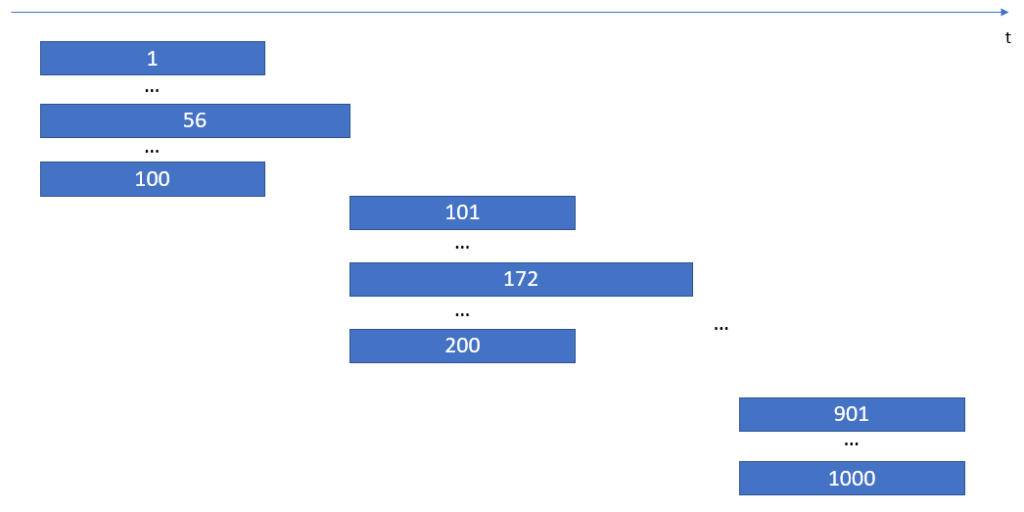

If you think about this code a little, you will spot a design flaw that makes the total time longer than necessary: if one request takes longer than the others, our code waits for it to complete before starting the next batch, even though the other slots in the batch are already free.

Can we do something about it? Indeed, yes, we can!

SemaphoreSlim to the Rescue

SemaphoreSlim is a class for limiting the number of threads, or in our async code the number of tasks, that can access a certain portion of code at the same time. It is much like the Semaphore class, if you have heard of it before, but more lightweight. We prefer SemaphoreSlim here because we don’t need the full functionality of the Semaphore class, and because it provides the awaitable WaitAsync method used below.

Now, we can use it to make our parallel HTTP requests in a more elegant way.

    public async Task<HttpResponseMessage[]> ParallelHttpRequestsInBatchesWithSemaphoreSlim()
    {
        var tasks = new List<Task<HttpResponseMessage>>();
        int numberOfRequests = 1000;
        int maxParallelRequests = 100;
        var semaphoreSlim = new SemaphoreSlim(maxParallelRequests, maxParallelRequests);

        for (int i = 0; i < numberOfRequests; ++i)
        {
            tasks.Add(MakeRequestWithSemaphoreSlimAsync(RandomWebsites.Uris[i], semaphoreSlim));
        }

        return await Task.WhenAll(tasks.ToArray());
    }

    private async Task<HttpResponseMessage> MakeRequestWithSemaphoreSlimAsync(Uri uri, SemaphoreSlim semaphoreSlim)
    {
        // Acquire a slot before entering try, so that finally releases
        // only a slot that was actually acquired.
        await semaphoreSlim.WaitAsync();

        try
        {
            using var httpClient = HttpClientFactory.Create();
            return await httpClient.GetAsync(uri);
        }
        catch
        {
            // Ignore any exception to continue loading other URLs.
            // You should definitely log the exception in a real-life application.
            return new HttpResponseMessage();
        }
        finally
        {
            // Free the slot so that a waiting request can start.
            semaphoreSlim.Release();
        }
    }

This code is very similar to our first approach, but has some differences that limit the number of parallel HTTP requests. First of all, we create a new SemaphoreSlim instance with the maximum number of parallel requests (i.e. 100). In addition, we pass this instance to our new MakeRequestWithSemaphoreSlimAsync method as a parameter.

When we look at the MakeRequestWithSemaphoreSlimAsync method, we see an interesting line:

    await semaphoreSlim.WaitAsync();

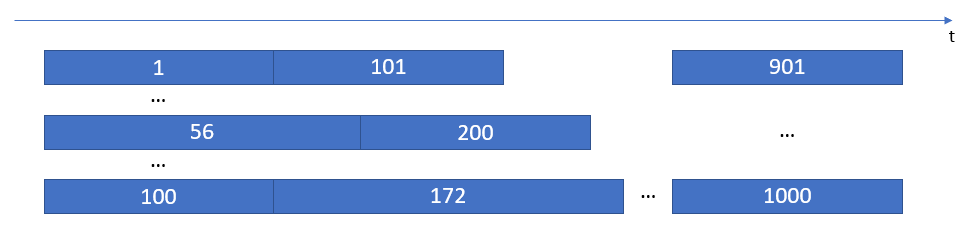

This is where the magic happens. When we call the WaitAsync method of our semaphoreSlim instance, it checks whether there is an available slot so that execution can continue. If so, our code goes on its way to create the HttpClient and send the request. When our code passes the WaitAsync call, SemaphoreSlim decreases the number of available slots by 1. For example, we had 100 available slots at the beginning (we passed the value 100 to SemaphoreSlim when we instantiated it), and now we have 99. When another parallel task runs this code, the count decreases to 98, then to 97 for the next one, and so on. When the counter reaches 0, all further tasks wait asynchronously at the WaitAsync line.

Our available slots have now reached 0. But how does the count increase again? We need to release occupied slots so that they become available again.

    semaphoreSlim.Release();

When a task calls the Release method of the semaphoreSlim object, it figuratively says “I am done with my work. Another task can use this slot.” So, the call to Release increases our counter by 1, which lets one of the tasks waiting at the WaitAsync line continue execution.
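
To watch the counter move, here is a small sketch that reads the CurrentCount property of SemaphoreSlim after each call. It uses 3 slots instead of 100 to keep the numbers short; SemaphoreCounterDemo and ObserveCountsAsync are hypothetical names made up for this illustration.

```csharp
using System.Threading;
using System.Threading.Tasks;

public static class SemaphoreCounterDemo
{
    // Returns the number of available slots observed after each step.
    public static async Task<int[]> ObserveCountsAsync()
    {
        using var semaphore = new SemaphoreSlim(3, 3);

        int initial = semaphore.CurrentCount;          // 3 slots available

        await semaphore.WaitAsync();                   // take one slot
        int afterFirstWait = semaphore.CurrentCount;   // 2

        await semaphore.WaitAsync();                   // take another slot
        int afterSecondWait = semaphore.CurrentCount;  // 1

        semaphore.Release();                           // give one slot back
        int afterRelease = semaphore.CurrentCount;     // 2

        return new[] { initial, afterFirstWait, afterSecondWait, afterRelease };
    }
}
```

Awaiting `SemaphoreCounterDemo.ObserveCountsAsync()` yields the counts 3, 2, 1, 2, which is the decrease and increase described above.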

It is a bit hard to illustrate this for a thousand requests, but a naive figure could look like this:

I admit that the illustration is not perfect, but the main point of this figure is that our code doesn’t wait for all requests in a batch to finish before moving on to the next request.
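
The difference can also be worked out with a little arithmetic. The sketch below is a simplified model, not a measurement: ScheduleModel and its methods are hypothetical names, the durations are invented, and the semaphore model assumes that waiting requests are admitted in order, which SemaphoreSlim does not guarantee.

```csharp
using System;
using System.Linq;

public static class ScheduleModel
{
    // Batches: every batch lasts as long as its slowest request.
    public static int BatchTotal(int[] durations, int batchSize)
    {
        int total = 0;
        for (int start = 0; start < durations.Length; start += batchSize)
        {
            total += durations.Skip(start).Take(batchSize).Max();
        }
        return total;
    }

    // Semaphore: every request starts as soon as any slot becomes free.
    // Assumption: requests are admitted in the order they were queued.
    public static int SemaphoreTotal(int[] durations, int slots)
    {
        var freeAt = new int[slots]; // time at which each slot becomes free
        foreach (int duration in durations)
        {
            int earliest = Array.IndexOf(freeAt, freeAt.Min());
            freeAt[earliest] += duration;
        }
        return freeAt.Max();
    }
}
```

For four requests taking 200, 10, 200 and 10 ms with two slots, `BatchTotal(new[] { 200, 10, 200, 10 }, 2)` gives 400, because each batch waits for its 200 ms request. `SemaphoreTotal` with the same input gives 210, because the second slow request starts as soon as the first fast one finishes.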

Now You Know

When we write an application, we should always keep in mind that the environment it runs in has limited resources. We can use SemaphoreSlim to manage those resources in an elegant way. Making parallel HTTP requests is only one example of this. You can use the same approach whenever you need to limit how many tasks run in parallel in your application.
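
As one possible generalization, the same pattern can be wrapped in a small helper that works for any asynchronous operation, not only HTTP requests. This is a minimal sketch under my own assumptions: Throttle, RunAsync and the work delegate are hypothetical names, not part of .NET.

```csharp
using System;
using System.Collections.Generic;
using System.Threading;
using System.Threading.Tasks;

public static class Throttle
{
    // Hypothetical helper: runs `work` for every item, with at most
    // `maxParallel` operations in flight at any time.
    public static async Task<TResult[]> RunAsync<TItem, TResult>(
        IEnumerable<TItem> items, int maxParallel, Func<TItem, Task<TResult>> work)
    {
        using var semaphore = new SemaphoreSlim(maxParallel, maxParallel);

        var tasks = new List<Task<TResult>>();
        foreach (var item in items)
        {
            tasks.Add(RunOneAsync(item));
        }

        // Results come back in the same order as the input items.
        return await Task.WhenAll(tasks);

        async Task<TResult> RunOneAsync(TItem item)
        {
            await semaphore.WaitAsync(); // wait for a free slot
            try
            {
                return await work(item);
            }
            finally
            {
                semaphore.Release();     // free the slot for the next item
            }
        }
    }
}
```

Unlike MakeRequestAsync, this helper does not swallow exceptions, so a failing work item makes the returned task fail after all other items have finished. Whether that is desirable depends on the application.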